I’m the team’s newcomer. My past professional and personal experiences have involved some scraping. Personally, I’ve used those skills to find my last rental, getting notified as soon as good classified ads were published. Professionally, I was on the “scraper side” while working for a French banking app that gives you access to all your bank accounts in one place. I love this topic, so I was delighted to see that our roadmap involved scraping.

So why do we need scraping?

When handling renovation projects for our customers, our in-house experts commonly need to fill in information about the materials those projects require. This is a key part of our onboarding process. During this process, they have to browse multiple external supplier websites and open a bunch of tabs to gather specific product page links, most of them provided by the customers themselves. In a nutshell, the process goes like this: they copy/paste a product page link provided by the customer, along with a large amount of product information, back into our application: title, price, description… This process is time-consuming and error-prone, which makes it a perfect pain point for scraping to solve.

For the first release, we aimed to support scraping for our 10 most requested suppliers. A first POC proved that we could get data back into our stack, and it went... almost fine. “Almost”, because one of the supplier websites sometimes answered with nasty 403 errors:

“Hello Mister robot, you’re not welcome here anymore.”

Shit happens, our anti-crawler meeting

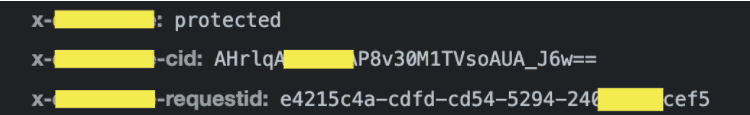

Luckily, I had met this situation before, so I already knew one of the possible causes. Basically, if you send exactly the same request that used to work and receive an anti-bot response, well... you have obviously been caught: you are most likely dealing with a shield solution. Consequently, you have to look carefully at all the data you send and receive (cookies, headers...) and search for any third-party name you don’t recognise. Spoiler: you will almost certainly end up finding an anti-bot provider. It’s only a start, but this analysis gives you a clue about which anti-bot solution is being used.

Suspicious request header found

An example of how an anti-crawler solution defines itself
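To make that inspection less tedious, a small helper can list everything unusual in a blocked response. The TypeScript sketch below assumes you already have the response headers as a plain object and the raw `Set-Cookie` values as an array (how you get them depends on your HTTP client). The `KNOWN_HEADERS` list is a hypothetical starting point, and the `X-Shield-Id` header and `shield_session` cookie in the usage are made-up names, not the ones we actually found.

```typescript
// Headers most sites send; anything outside this set deserves a closer look.
// Hypothetical starting list, not an exhaustive reference.
const KNOWN_HEADERS = new Set<string>([
  "cache-control", "connection", "content-encoding", "content-length",
  "content-type", "date", "etag", "expires", "last-modified", "server",
  "set-cookie", "strict-transport-security", "vary",
  "x-content-type-options", "x-frame-options",
]);

interface Finding {
  kind: "header" | "cookie";
  name: string;
  value: string;
}

// Extracts the name/value pair from one Set-Cookie header value.
function parseSetCookie(raw: string): { name: string; value: string } | null {
  const pair = raw.split(";")[0];
  const eq = pair.indexOf("=");
  if (eq <= 0) return null;
  return { name: pair.slice(0, eq).trim(), value: pair.slice(eq + 1).trim() };
}

// Lists every non-standard header and every cookie set by a (blocked) response,
// so that an unfamiliar vendor name stands out.
function findUnfamiliar(headers: Record<string, string>, setCookies: string[]): Finding[] {
  const findings: Finding[] = [];
  for (const [name, value] of Object.entries(headers)) {
    if (!KNOWN_HEADERS.has(name.toLowerCase())) findings.push({ kind: "header", name, value });
  }
  for (const raw of setCookies) {
    const cookie = parseSetCookie(raw);
    if (cookie) findings.push({ kind: "cookie", ...cookie });
  }
  return findings;
}

// Hypothetical blocked response: the vendor-looking names are made up.
const findings = findUnfamiliar(
  { "Content-Type": "text/html", "X-Shield-Id": "abc123" },
  ["shield_session=xyz; Path=/; HttpOnly"],
);
for (const f of findings) console.log(`${f.kind}: ${f.name}=${f.value}`);
// prints "header: X-Shield-Id=abc123" then "cookie: shield_session=xyz"
```

Listing every cookie, rather than filtering them, is deliberate: shields tend to identify themselves through cookie names as much as through headers.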

OK, that initial analysis didn’t get us very far... so we were back to the drawing board. The “pause café” (coffee break) meeting was a great help here: I had 6 (amazing) coworkers, each with a fresh IP address, to help me. What did we learn together? When we browsed the website gently, everything went fine. However, when we refreshed the same page frantically, we got flagged as robots, and the flag seemed to last for a couple of days. Finally, once we had solved the captcha, scraping “at the speed of light” without getting flagged was no longer a problem.

I also ran an additional test by deploying my scraper on AWS (thus exposing an AWS IP): every single request was flagged as coming from a bot. Hence, we formulated the following hypotheses:

- The shield tracked our IP and banned it once it was no longer trusted

- The shield analyzed our sequence of requests and banned any IP showing unusual behaviour

- The shield blindly trusted, for some time, anyone who had solved the captcha

One option would have been to scrape gently, delaying user calls to stay under the shield’s limit. Yet this didn’t fit our needs: we wanted to serve multiple requests for the same supplier site, and we also wanted a reasonable response time. Plus, we didn’t want to fine-tune these delays every time the shield changed its configuration.
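For reference, the option we ruled out could look like the sketch below: a per-host limiter enforcing a minimum gap between two requests to the same supplier. The `HostThrottle` class and the 2-second interval used in the test are hypothetical; that interval is exactly the kind of value we did not want to keep re-tuning. The clock and sleep functions are injectable so the logic can be checked without actually waiting.

```typescript
type Clock = () => number;
type Sleep = (ms: number) => Promise<void>;

const realSleep: Sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Enforces a minimum delay between two requests sent to the same host.
class HostThrottle {
  private readonly nextSlot = new Map<string, number>();
  private readonly minIntervalMs: number;
  private readonly now: Clock;
  private readonly sleep: Sleep;

  constructor(minIntervalMs: number, now: Clock = Date.now, sleep: Sleep = realSleep) {
    this.minIntervalMs = minIntervalMs;
    this.now = now;
    this.sleep = sleep;
  }

  // Resolves once it is this caller's turn to hit `host`.
  async wait(host: string): Promise<void> {
    const current = this.now();
    const slot = Math.max(current, this.nextSlot.get(host) ?? 0);
    // Reserve the slot before sleeping so concurrent callers queue up behind it.
    this.nextSlot.set(host, slot + this.minIntervalMs);
    if (slot > current) await this.sleep(slot - current);
  }
}
```

Every scraper call would then `await throttle.wait(hostname)` before fetching, which also shows the downside: with several users on the same supplier, the later ones simply queue up.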

How to get a clean IP in order to break the challenge

The best solution we came up with was to solve this challenge and end up with a fully trusted client. But first and foremost, I needed a way to get clean IPs whenever necessary, so that I could control my IP and test these hypotheses. AWS was no help here, as such shield solutions know the AWS IP ranges and also analyse HTTP client behaviour carefully. So my first idea was to use a proxy. “Yeah, a new, super clean, working IP, I can try this, or that…” Whoopsie, I got caught again. Then came my second idea: a proxy pool! Much better: now I was in the best position to test whether there was a way to solve the challenge. For the sake of completeness, note that using Tor to get free clean IPs was also in our backlog of “ideas to try out”.

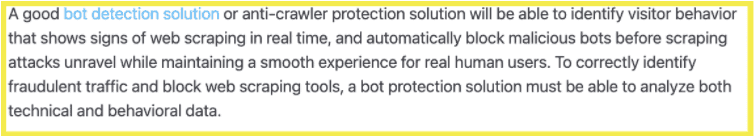
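In code, a proxy pool boils down to rotating through a list of exit IPs and benching the ones the shield has flagged. The sketch below is a hypothetical round-robin `ProxyPool`. The proxy URLs in the test are placeholders and the ban duration is a parameter (since our flags seemed to last a couple of days, something of that order would be a natural first guess). Plugging the chosen proxy into the HTTP client is client-specific (node-fetch, for instance, accepts an `agent` option) and is left out.

```typescript
type Clock = () => number;

// Round-robin pool of proxy URLs that benches any proxy flagged by the shield.
class ProxyPool {
  private readonly proxies: string[];
  private readonly banMs: number;
  private readonly now: Clock;
  private readonly bannedUntil = new Map<string, number>();
  private cursor = 0;

  constructor(proxies: string[], banMs: number, now: Clock = Date.now) {
    this.proxies = proxies;
    this.banMs = banMs;
    this.now = now;
  }

  // Returns the next proxy that is not benched, or null if all of them are.
  next(): string | null {
    for (let i = 0; i < this.proxies.length; i++) {
      const index = (this.cursor + i) % this.proxies.length;
      const proxy = this.proxies[index];
      if ((this.bannedUntil.get(proxy) ?? 0) <= this.now()) {
        this.cursor = (index + 1) % this.proxies.length;
        return proxy;
      }
    }
    return null;
  }

  // Call this when a request through `proxy` came back as an anti-bot response.
  markBanned(proxy: string): void {
    this.bannedUntil.set(proxy, this.now() + this.banMs);
  }
}
```

Returning `null` instead of waiting lets the caller decide what to do when every IP is burnt, for example falling back to a paid provider.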

So, whenever the shield solution caught a suspicious IP, it returned a captcha challenge. This was not a homemade captcha, but a very widely used one from a famous provider. I see that kind of captcha every day, so I was pretty confident it was a common and robust solution. I observed what happened when the challenge was solved: some requests carrying numbers were sent to the captcha provider, which returned a validation string. This string then had to be sent to the shield solution, and then to the original website. That’s when I realized my IP could be cleaned this way...

Yet the problem with being well known is that many people want to break your algorithm. Unsurprisingly, I found many resources on the web about how to break it, or about how much it costs to have someone break it for you automatically (or even have real humans solve it for you). As much as I would have loved to solve this captcha myself, I was short on time. So we gathered around the fire with the team and agreed to hand this job to someone else. We weren’t prepared to maintain a captcha solver: we aren’t experts, and we didn’t know how long implementing it would take.

Statistics about the success rate of a captcha breaker service

An ad from 2000 BC, it seems

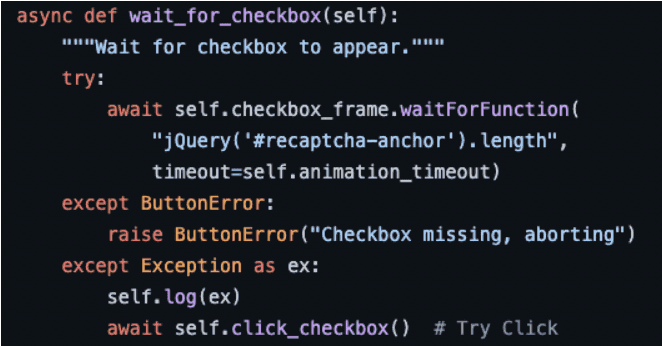

A script extract doing the job… but for how long?

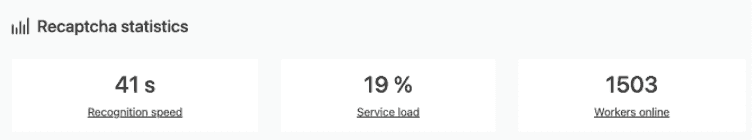

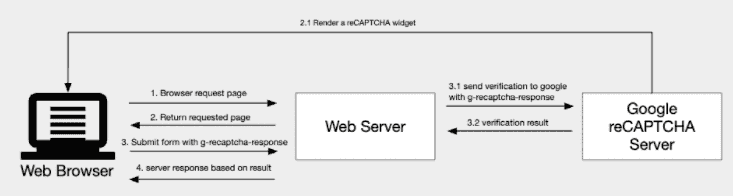

Fortunately, there were a whole lot (really a lot) of possible solutions. Many parameters had to be benchmarked: success rate, response time, pricing versus free trial, method (human or OCR), API, SDK, supported captchas… What we wanted was a durable solution with a high success rate and a short resolution time, one that also looked good and had a consistently good reputation. A quick explanation: basically, all captcha resolvers work by exposing an API endpoint. You provide the data (text and/or image) you want solved and get back the result of the challenge. Then you submit that solution to the captcha provider to get a response. Finally, this response has to be sent, as part of the cookies, with the original request. Then you’re (finally) considered trusted. Victory!

So I tried a variety of solutions (about four of them at the time, if I remember correctly). Among these, I found a service offering many features, such as a custom proxy pool and captcha resolution, all exposed through a simple GET request with query params. The pricing was “Okayyy” and the success rate good enough. The included proxy pool also offered control over IP geolocation, and the API endpoint was very convenient to use. With such a solution, our final strategy was to try crawling any URL directly and, if that failed, simply try again through that captcha resolution provider.

Common workflow to implement reCAPTCHA

To sum up: websites pay to secure their data, scrapers pay to obtain it. We chose to spend a few bills and let the two fight it out.
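Our final strategy (crawl directly first, and only on failure retry through the provider) fits in a few lines. This sketch assumes a hypothetical provider endpoint `https://api.scraping-provider.example/v1` with hypothetical query parameters `api_key`, `url` and `country`; every real provider names these differently, so treat them as placeholders. The HTTP function is injected to keep the example client-agnostic and testable: a thin wrapper around node-fetch or the global `fetch` returning `{ status, body }` would do.

```typescript
// Minimal view of an HTTP response; adapt it from node-fetch or global fetch.
interface SimpleResponse {
  status: number;
  body: string;
}

type HttpGet = (url: string) => Promise<SimpleResponse>;

interface ScrapeResult {
  via: "direct" | "provider";
  body: string;
}

// Hypothetical provider endpoint: replace with your provider's real one.
const PROVIDER_ENDPOINT = "https://api.scraping-provider.example/v1";

// Builds the provider URL that fetches `target` through its proxy pool and
// solves the challenge if one shows up (hypothetical parameter names).
function providerUrl(target: string, apiKey: string, country: string): string {
  const url = new URL(PROVIDER_ENDPOINT);
  url.searchParams.set("api_key", apiKey);
  url.searchParams.set("url", target);
  url.searchParams.set("country", country);
  return url.toString();
}

// Tries the supplier site directly first, then falls back to the paid provider.
async function fetchProductPage(
  target: string,
  get: HttpGet,
  apiKey: string,
  country = "fr",
): Promise<ScrapeResult> {
  try {
    const direct = await get(target);
    if (direct.status === 200) return { via: "direct", body: direct.body };
  } catch {
    // Network-level errors are treated like a block and fall through.
  }
  const viaProvider = await get(providerUrl(target, apiKey, country));
  if (viaProvider.status !== 200) {
    throw new Error(`provider failed with status ${viaProvider.status} for ${target}`);
  }
  return { via: "provider", body: viaProvider.body };
}
```

Any non-200 answer or network error on the direct attempt triggers the paid retry. This keeps the logic simple at the cost of occasionally paying for a page that was genuinely missing (a 404, say).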

Optimize the process

The huge caveat, though, was the duration of the anti-captcha process… We got the expected data in both situations, but the no-shield scenario gave us a 3-second response time, while the shielded one took about 15 to 20 seconds. That is a very long time for our average end user. Of course, it is still much faster than doing the job by hand, but it was nowhere near the blazing-fast solution we had imagined. Since we couldn’t improve the response time of the anti-shield solution, we looked at speeding up the process on our side: 3 or 4 seconds to get a page wasn’t a reasonable time for a robot.

Since the POC, we had been using Puppeteer, a well-known library that drives a headless Chrome, so pages behave almost exactly as in a regular browser. Those 3 seconds came from there. I tried disabling as many options as possible while also caching browser instances… Well, that did the trick! We now got results way faster: we clocked 1.5 seconds! My Puppeteer setup had become so stripped down, with no game-changing feature left, that I wondered: “Is there still a reason to use it?” I believe this was a relevant question: if we take really good care of our HTTP headers and cookies, we don’t need such a heavy solution. I decided to switch to the node-fetch library, which did just what I wanted, and did it well: 0.5 seconds for a non-shielded page, 12 to 17 seconds for a shielded one. That’s when I told myself: “We’ve done our job on our side.”
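Dropping the headless browser means the HTTP client has to carry what the browser used to handle for free: a consistent set of headers and the cookies handed out by the site and its shield. The sketch below keeps a tiny in-memory cookie jar per host and builds request headers from it. The header values are illustrative assumptions, not the ones we actually send, and the jar deliberately ignores `Path`, `Domain` and expiry attributes, which a production client (or a library such as `tough-cookie`) would honour.

```typescript
// Browser-like headers sent with every request (illustrative values).
const BASE_HEADERS: Record<string, string> = {
  "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36",
  "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
  "Accept-Language": "fr-FR,fr;q=0.9,en;q=0.8",
};

// Minimal per-host cookie jar: keeps only name=value pairs from Set-Cookie.
class CookieJar {
  private readonly byHost = new Map<string, Map<string, string>>();

  // Records cookies from the Set-Cookie header values of a response.
  store(host: string, setCookieHeaders: string[]): void {
    const jar = this.byHost.get(host) ?? new Map<string, string>();
    for (const raw of setCookieHeaders) {
      const pair = raw.split(";")[0];
      const eq = pair.indexOf("=");
      if (eq > 0) jar.set(pair.slice(0, eq).trim(), pair.slice(eq + 1).trim());
    }
    this.byHost.set(host, jar);
  }

  // Serialises the cookies for `host` into a Cookie request header value.
  header(host: string): string {
    const jar = this.byHost.get(host);
    if (!jar) return "";
    return Array.from(jar, ([name, value]) => `${name}=${value}`).join("; ");
  }
}

// Builds the headers for a request to `url`, replaying stored cookies.
function requestHeaders(url: string, jar: CookieJar): Record<string, string> {
  const headers = { ...BASE_HEADERS };
  const cookie = jar.header(new URL(url).hostname);
  if (cookie) headers["Cookie"] = cookie;
  return headers;
}
```

How you collect the `Set-Cookie` values depends on the client; with the global `fetch` of recent Node versions, `response.headers.getSetCookie()` returns them as an array.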

I got in touch with our anti-shield provider to ask whether he could speed up his process. He tried “something” that increased my success rate a lot: amazing, buddy, we are now 90% reliable. But he told me this was the best performance they could achieve for this kind of captcha. Me cry. So I went hunting for other possible providers. Bottom line: such long resolution times seem to be the norm. As far as I could tell, it was already a good enough solution, so be it!

We’re almost ready to launch the feature, but we care about logs

The last but not least of these steps was to set up a really efficient logging system that would also collect metrics about the success rate. There was no real difficulty here, yet we needed to debug failures easily and be warned if anything went wrong. Since a failure could happen on our side as well as on the website or shield side, we needed to keep an eye on everything and follow up on any issue as quickly as possible. Some logs and a CloudWatch dashboard gave us a first solution. As a further development, it would be a great addition to store in AWS S3 a snapshot of every web page that led to an error, or to be notified through a Slack message. This is kept very safe in my “cool ideas” cupboard for future improvements.
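A minimal sketch of that logging side: one structured JSON line per scrape, plus in-memory counters for a per-supplier success rate. The `ScrapeMetrics` class and its field names are assumptions for illustration. Writing JSON to stdout is convenient because, for example, a Lambda function forwards stdout to CloudWatch Logs, where metric filters or Logs Insights queries could aggregate those fields for a dashboard.

```typescript
type Outcome = "direct" | "provider" | "failed";

interface ScrapeEvent {
  supplier: string;
  url: string;
  outcome: Outcome;
  durationMs: number;
  error?: string;
}

// Accumulates per-supplier counters and writes one JSON log line per scrape.
class ScrapeMetrics {
  private readonly counts = new Map<string, Record<Outcome, number>>();
  private readonly log: (line: string) => void;

  constructor(log: (line: string) => void = (line) => console.log(line)) {
    this.log = log;
  }

  record(event: ScrapeEvent): void {
    this.log(JSON.stringify({ type: "scrape", ...event }));
    const c = this.counts.get(event.supplier) ?? { direct: 0, provider: 0, failed: 0 };
    c[event.outcome] += 1;
    this.counts.set(event.supplier, c);
  }

  // Share of scrapes that returned a page, whichever path was used.
  successRate(supplier: string): number {
    const c = this.counts.get(supplier);
    if (!c) return 0;
    const total = c.direct + c.provider + c.failed;
    return total === 0 ? 0 : (c.direct + c.provider) / total;
  }
}
```

Keeping `direct` and `provider` as separate outcomes makes it easy to see how often the slow, paid path is taken, not just whether the scrape eventually worked.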

As of today, the feature has launched, it works pretty well, and as far as we know the scraping delay doesn’t seem to bother our users much. All in all, the feature appears to reasonably meet our end users’ needs. Thanks to the team’s kind and supportive warnings, I avoided spending too much time on speed optimization. So once again, thanks lads!

In a nutshell, here is what I’ve learned so far:

- Public data is public. Getting it with a basic robot is harder today, but many working solutions are still available.

- Don’t push yourself too hard. The solution is not perfect, but it is acceptable. If this had been a personal project, I would have tried to break this captcha on my own; the team helped me stay humble and accept this OK solution.