In our products, we have several use cases which rely on image uploading/displaying (user pictures, house layouts, inspiring photos...). While we provided guidelines for our users to resize their images on their end before uploading, we realized that this was not enough as many images were still displayed with their original size, resulting in heavy payloads and long display times. This is why we started to look for an elegant way to resize our images on-the-fly, right before displaying them on the frontend side.

As we are using AWS S3 to host our images, we naturally searched for documented resizing solutions compatible with that service. One blog post in particular provided a solution of great interest: https://aws.amazon.com/fr/blogs/compute/resize-images-on-the-fly-with-amazon-s3-aws-lambda-and-amazon-api-gateway/

The proposed approach fitted nicely with our use case, with two exceptions:

- we already had our S3 bucket in production, with many uploaded images organized under several particular prefixes, and we did not want to migrate the bucket nor modify the existing images in any way

- our S3 bucket was accessed through a CloudFront distribution, adding proper domain naming and HTTPS access for our images on S3, and there again, we wanted to keep this architecture for compatibility with our existing frontends

In this blog post, we will detail what we did on top of the previous approach, in order to fit our compatibility needs.

Our design choice

Basically we had two requirements:

- our existing uploaded images had to remain accessible at their current URLs, for backward compatibility with our frontends

- any uploaded image should be downloadable in a resized version, by adding target dimensions in the request URL, for example through query parameters

In the initial blog post, this was achieved by specifying the dimensions directly in the URI, inside the S3 prefix (prefix is the term used by S3 to qualify any URI part before the file name). However this meant using different prefixes for the original and the resized images, therefore changing the prefix organization of our S3 bucket, and this did not seem so elegant in our opinion.

We kept the idea of providing the dimensions in the URI, but chose to put those dimensions in the file name instead of the prefix. For example:

- when having the original file: /inspiration/pictures/file.jpg

- the following URI would return a resized version of it: /inspiration/pictures/file.sized-600x400.jpg
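To make the naming convention concrete, here is a minimal sketch of how a frontend could derive the URI of a resized image from the original one. The resizedKey helper is hypothetical (it is not part of our codebase) and assumes the key always ends with a file extension.

```javascript
// Hypothetical helper (not from the original code): builds the key of a
// resized variant by inserting ".sized-<width>x<height>" before the extension.
function resizedKey(originalKey, width, height) {
  const dot = originalKey.lastIndexOf('.');
  if (dot === -1) {
    throw new Error('Expected a key with a file extension: ' + originalKey);
  }
  return `${originalKey.slice(0, dot)}.sized-${width}x${height}${originalKey.slice(dot)}`;
}

console.log(resizedKey('/inspiration/pictures/file.jpg', 600, 400));
// prints "/inspiration/pictures/file.sized-600x400.jpg"
```

Since the original key is left untouched, existing URLs keep working and only the new, suffixed URLs trigger a resize.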

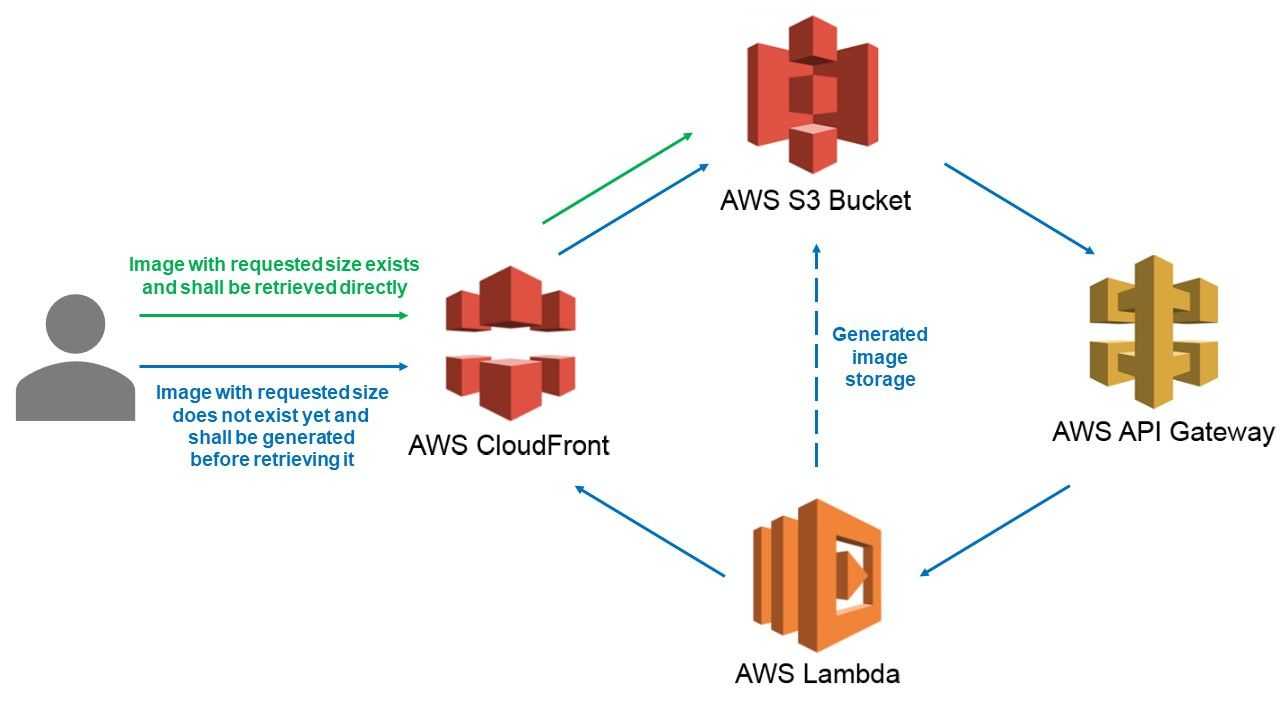

The overall implemented architecture remained the same, with the addition of the CloudFront facade:

- Whenever a resized image would be requested through a CloudFront-backed URL, CloudFront would forward the request to S3, which then would check the existence of the related file on S3.

- In case the file exists, S3 would return it immediately

- In case the file is not found, S3 would issue a redirect (with a 307 status in our configuration) to a Lambda exposed by API Gateway, which would generate the resized image and store it on S3.

- Finally the Lambda would issue another redirect request to the initial CloudFront-backed URL, but now pointing to the newly created, resized image.
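The sequence above can be sketched as a small in-memory model. This is only an illustration of the order of the hops: no AWS service is called, a Set stands in for the bucket content, and the simulateRequest name is an assumption made for this example.

```javascript
// Simplified, in-memory model of the flow described above. No AWS service is
// called; the Set stands in for the bucket content and all names are
// illustrative.
function simulateRequest(bucketKeys, key) {
  const steps = ['CloudFront forwards the request to S3'];
  if (!bucketKeys.has(key)) {
    steps.push('S3 redirects to the Lambda behind API Gateway');
    bucketKeys.add(key); // the Lambda generates the resized image and stores it
    steps.push('Lambda redirects back to the CloudFront-backed URL');
    steps.push('CloudFront forwards the request to S3');
  }
  steps.push('S3 returns the image');
  return steps;
}

const bucket = new Set(['inspiration/pictures/file.jpg']);
const resized = 'inspiration/pictures/file.sized-600x400.jpg';
console.log(simulateRequest(bucket, resized).length); // first request: 5 steps
console.log(simulateRequest(bucket, resized).length); // later requests: 2 steps
```

The point of the design is visible in the second call: once a resized image has been stored, later requests never reach the Lambda.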

Below is a representation of the target architecture.

Our AWS bricks and how they interact


The modified Lambda

Our updated Lambda code still relied on the Sharp library for the image manipulation part. The main differences were in the regex used to extract the dimensions from the URI, and the fact that all necessary parameters were now provided when calling the Lambda (instead of hard-coding the bucket or the target host in the runtime environment).

Below is the updated code that we used.

    'use strict';

    const AWS = require('aws-sdk');
    const S3 = new AWS.S3({
      signatureVersion: 'v4',
    });
    const Sharp = require('sharp');

    exports.handler = function(event, context, callback) {
      // All parameters are provided by the caller as query parameters
      const query = event.queryStringParameters;
      const protocol = query.protocol;
      const host = query.host;
      const bucket = query.bucket;
      const prefix = query.prefix;
      const key = query.key;

      // Extract the target dimensions and the extension from the file name,
      // e.g. "file.sized-600x400.jpg"
      const match = key.match(/\.sized-(\d+)x(\d+)\.(jpe?g|gif|png)$/i);
      if (!match) {
        callback(null, {
          statusCode: '403',
          headers: {},
          body: '',
        });
        return;
      }

      const [suffix, widthStr, heightStr, extension] = match;
      const width = parseInt(widthStr, 10);
      const height = parseInt(heightStr, 10);
      const originalKeyWithPrefix = prefix + key.replace(suffix, '.' + extension);
      const targetKeyWithPrefix = prefix + key;

      // Normalize "jpg" to "jpeg" (the format is also used to build the Content-Type)
      let format = extension.toLowerCase();
      if (format === 'jpg') format = 'jpeg';

      // Fetch the original image, resize it, store the result, then redirect
      S3.getObject({Bucket: bucket, Key: originalKeyWithPrefix}).promise()
        .then(data => Sharp(data.Body)
          .resize(width, height, { fit: 'contain', background: 'white' })
          .toFormat(format)
          .toBuffer()
        )
        .then(buffer => S3.putObject({
            Body: buffer,
            Bucket: bucket,
            ContentType: 'image/' + format,
            Key: targetKeyWithPrefix,
          }).promise()
        )
        .then(() => callback(null, {
            statusCode: '301',
            headers: {'location': `${protocol}://${host}/${targetKeyWithPrefix}`},
            body: '',
          })
        )
        // Report failures (e.g. a missing original image) through the Lambda callback
        .catch(err => callback(err));
    };

Here are the steps for creating, packaging and deploying the Lambda:

- on AWS, create a new Lambda, attach a role to it which can access both CloudWatch logs and your target S3 bucket(s), and expose the Lambda with an API Gateway endpoint (the original article provides guidelines for those steps)

- in your Lambda, make sure that the Node.js version is the same as in your local environment and that you have increased the default memory and timeout values (to at least 512 MB and 10 s respectively), otherwise your Lambda will not execute properly

- on your local environment, create an index.js file containing the code snippet above

- download the sharp module by running: npm install --arch=x64 --platform=linux sharp

- package the files together by running: zip -r image-resizer.zip node_modules/ index.js

- upload the image-resizer.zip package into your Lambda using the web console (we could also have deployed our Lambda with SAM or Serverless, but we kept things minimal in the context of this project)

S3 configuration

The steps we used to configure S3 were pretty much the same as in the initial blog post.

The first step was to enable "Static website hosting" on the S3 bucket. In our case this had not been enabled until then, as bucket access was previously restricted to our CloudFront distribution using its Origin Access Identity.

Then we configured the S3 redirection rules under the "Static website hosting" section, to redirect all 404 requests to our Lambda's (API-Gateway-faced) endpoint. We used the JSON rules below; note again that unlike the initial blog post, we chose to provide all the necessary Lambda parameters when redirecting to its endpoint, instead of defining environment variables at the Lambda level, so that we could potentially use this solution with different S3 buckets.

    [
      {
        "Condition": {
          "HttpErrorCodeReturnedEquals": "404",
          "KeyPrefixEquals": "inspiration/pictures/"
        },
        "Redirect": {
          "HostName": "xxx.execute-api.yyy.amazonaws.com",
          "HttpRedirectCode": "307",
          "Protocol": "https",
          "ReplaceKeyPrefixWith": "v1/image-resizer?protocol=http&host=our-images.s3-website.xxx.amazonaws.com&bucket=our-images&prefix=inspiration/pictures/&key="
        }
      }
    ]

Finally, we granted public access to the S3 bucket under the bucket's "Permissions" section. This was required to enable S3's static website redirection feature, which by the way only works when using the endpoint URL containing "-website", e.g. http://our-images.s3-website.xxx.amazonaws.com (the exact form depends on your AWS region).
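To illustrate how the redirection rule shown earlier ends up passing the file name to the Lambda, the sketch below models the rewrite as we understand it: the part of the key matching KeyPrefixEquals is replaced by ReplaceKeyPrefixWith, so the rest of the key lands right after the trailing "key=". The redirectLocation helper and the shape of the rule object are assumptions made for this example, not an AWS API.

```javascript
// Simplified model of how an S3 website routing rule rewrites a request.
// The values mirror our rule; the helper and object shape are hypothetical.
const rule = {
  keyPrefixEquals: 'inspiration/pictures/',
  protocol: 'https',
  hostName: 'xxx.execute-api.yyy.amazonaws.com',
  replaceKeyPrefixWith:
    'v1/image-resizer?protocol=http&host=our-images.s3-website.xxx.amazonaws.com' +
    '&bucket=our-images&prefix=inspiration/pictures/&key=',
};

function redirectLocation(rule, requestedKey) {
  if (!requestedKey.startsWith(rule.keyPrefixEquals)) {
    return null; // the rule does not apply to this key
  }
  // Everything after the matched prefix is appended to the replacement
  const remainder = requestedKey.slice(rule.keyPrefixEquals.length);
  return `${rule.protocol}://${rule.hostName}/${rule.replaceKeyPrefixWith}${remainder}`;
}

console.log(redirectLocation(rule, 'inspiration/pictures/file.sized-600x400.jpg'));
```

For the key above, the resulting location ends with key=file.sized-600x400.jpg, which is exactly the value the Lambda reads from its query parameters.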

For public access and redirection to Lambda to be fully operational, updating the bucket's policy also proved to be necessary. In particular, we had to add the s3:ListBucket action to allow 404 return codes instead of 403 when a resource is not found on S3:

    {
      "Version": "2012-10-17",
      "Id": "some id",
      "Statement": [
        {
          "Sid": "some sid",
          "Effect": "Allow",
          "Principal": {
            "AWS": "*"
          },
          "Action": [
            "s3:GetObject",
            "s3:ListBucket"
          ],
          "Resource": [
            "arn:aws:s3:::our-images",
            "arn:aws:s3:::our-images/*"
          ]
        }
      ]
    }

So at this point, whenever accessing a URL such as http://our-images.s3-website.xxx.amazonaws.com/inspiration/pictures/file.sized-600x400.jpg, we had either S3 directly returning an existing image, or S3 using the Lambda to generate the missing image with the requested size before returning it transparently.

Securing with CloudFront

The final part for us was to make the whole solution work with our existing CloudFront distribution, so that we could benefit from proper domain naming and HTTPS access on top of S3.

In the previous steps we explained how we made our S3 bucket completely public, but actually what we really wanted was to grant access only to this specific CloudFront facade. So we ended up adding a new referer condition at the end of our bucket policy:

    {
      "Version": "2012-10-17",
      "Id": "some id",
      "Statement": [
        {
          "Sid": "some sid",
          "Effect": "Allow",
          "Principal": {
            "AWS": "*"
          },
          "Action": [
            "s3:GetObject",
            "s3:ListBucket"
          ],
          "Resource": [
            "arn:aws:s3:::our-images",
            "arn:aws:s3:::our-images/*"
          ],
          "Condition": {
            "StringLike": {
              "aws:Referer": "https://our-images.renovationman.com/*"
            }
          }
        }
      ]
    }

On the CloudFront configuration side, under the "Origins" section, we had to update the existing Origin to use our S3 static website. In the first field, "Origin domain", instead of selecting the S3 bucket in the drop-down list as the console suggested, we pasted the S3 static website endpoint URL directly (in our example, http://our-images.s3-website.xxx.amazonaws.com).

Still in the Origin configuration page, we added a custom HTTP header to match the S3 policy condition and thus allow CloudFront to access the bucket: Referer: https://our-images.renovationman.com/*
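As a rough illustration of why this header value satisfies the bucket policy condition, the sketch below models StringLike matching with a hypothetical stringLike helper, where "*" matches any run of characters and "?" matches a single character. It is a simplified approximation of the policy evaluation, not AWS code, and the rejected host is a made-up value.

```javascript
// Simplified model of a StringLike match: "*" matches any run of characters
// and "?" matches exactly one character. Everything else is matched literally.
function stringLike(pattern, value) {
  // Escape regex metacharacters, except the two wildcards handled below
  const escaped = pattern.replace(/[.+^${}()|[\]\\]/g, '\\$&');
  const regex = new RegExp('^' + escaped.replace(/\*/g, '.*').replace(/\?/g, '.') + '$');
  return regex.test(value);
}

const condition = 'https://our-images.renovationman.com/*';

// The header value configured on the CloudFront origin matches the condition
console.log(stringLike(condition, 'https://our-images.renovationman.com/*')); // true
// A request carrying another Referer (made-up host) does not
console.log(stringLike(condition, 'https://other-site.example/page')); // false
```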

Our very final step was to update the S3 static website hosting redirection rules once again, to ensure the user lands on our CloudFront-backed host instead of S3 (which is no longer directly accessible) every time the Lambda generates and stores a resized image. So we updated both the host and protocol parameters:

    [
      {
        "Condition": {
          "HttpErrorCodeReturnedEquals": "404",
          "KeyPrefixEquals": "inspiration/pictures/"
        },
        "Redirect": {
          "HostName": "xxx.execute-api.yyy.amazonaws.com",
          "HttpRedirectCode": "307",
          "Protocol": "https",
          "ReplaceKeyPrefixWith": "v1/image-resizer?protocol=https&host=our-images.renovationman.com&bucket=our-images&prefix=inspiration/pictures/&key="
        }
      }
    ]

Voilà. Instead of exposing S3 URLs, we were finally able to provide the on-the-fly resize feature with nice HTTPS URLs such as https://our-images.renovationman.com/inspiration/pictures/file.sized-600x400.jpg.

Some final notes

The purpose of this article was to share our own take on how to resize images on S3 dynamically together with an existing CloudFront distribution. There are still some possible improvements that you should consider for your own implementation (not covered here), such as restricting the dimensions which can be requested upon resizing, avoiding the transfer of sensitive information such as the bucket name when redirecting to the Lambda, or relying on query parameters for the resize requests to avoid modifying the original image name.
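As an illustration of the first improvement, restricting the requested dimensions could look like the minimal sketch below. The list of allowed sizes and the isAllowedSize helper are placeholders chosen for this example; they are not part of the Lambda presented in this article.

```javascript
// Assumption: the allowed sizes below are placeholders, to be replaced by the
// dimensions your frontends actually need.
const ALLOWED_DIMENSIONS = new Set(['300x200', '600x400', '1200x800']);

// Returns true only for width/height pairs that are explicitly allowed
function isAllowedSize(width, height) {
  return ALLOWED_DIMENSIONS.has(`${width}x${height}`);
}

console.log(isAllowedSize(600, 400));   // true
console.log(isAllowedSize(5000, 5000)); // false
```

In the Lambda, such a check could sit right after the dimensions are parsed, returning the same 403 response as for a file name that does not match the expected pattern.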